3.1 Offline-animation Implementation

The SkinnedMesh sample, which is part of the DirectX 8 SDK, already offers functions to load the mesh, its texture and its animation sets from X files [Freid2001]. The sample code makes use of a framework that handles device initialisation and shows how to render a skinned mesh with software vertex processing as well as with indexed and non-indexed hardware T&L vertex processing. The mesh and animation information in the X files is stored in frames, called sFrames here. Animation is handled by linked lists of key frames per joint, together with the number of key frames. To play an animation, the provided function SetTime is called with a time value in a loop over all the frames in FrameMove:

while (pdeCur != NULL) // pointer to current sub-object (mesh)
{
    pframeCur = pdeCur->pframeAnimHead; // current frame is head of hierarchy
    while (pframeCur != NULL)
    {
        pframeCur->SetTime(pdeCur->fCurTime); // go to timestamp in the animation
        pframeCur = pframeCur->pframeAnimNext; // advance to next bone or joint
    }
    pdeCur = pdeCur->pdeNext; // advance to next sub-object in the X file
}

Timestamps and frame numbers of the 3ds max animation are related through the 3ds max time unit of 1/4800 s. If the animation is saved at 30 fps, one frame spans 4800 / 30 = 160 time units, so frame n lies at timestamp n * 4800 / 30. The value 4800 is chosen because it is a multiple of the standard frame rates 24 (movies), 60 (NTSC) and 50 (PAL).
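The conversion between frame numbers and timestamps can be sketched as follows. This is only an illustration: it assumes that timestamps are counted in 3ds max time units of 1/4800 s, and the names kTicksPerSecond, TicksPerFrame, FrameToTicks and TicksToFrame are hypothetical, not part of the SDK sample.

```cpp
#include <cassert>

// 3ds max time unit: 4800 ticks per second.
const int kTicksPerSecond = 4800;

// Number of ticks that one frame spans at the given frame rate
// (160 at 30 fps). The frame rate must divide 4800 evenly.
int TicksPerFrame(int framesPerSecond)
{
    assert(framesPerSecond > 0 && kTicksPerSecond % framesPerSecond == 0);
    return kTicksPerSecond / framesPerSecond;
}

// Timestamp (in ticks) at which the given frame starts.
int FrameToTicks(int frame, int framesPerSecond)
{
    return frame * TicksPerFrame(framesPerSecond);
}

// Frame that contains the given timestamp (in ticks), rounded down.
int TicksToFrame(int ticks, int framesPerSecond)
{
    return ticks / TicksPerFrame(framesPerSecond);
}
```

Integer arithmetic is sufficient here because 4800 is divisible by all of the frame rates mentioned above.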

The SetTime function searches the current joint for the matrix key that corresponds to the given timestamp. If the timestamp lies between two matrix keys, the key frame that is closer in time to the given value is displayed. This results in jumpy, "jaggy" animation at lower frame rates, since consecutive keys usually represent positions that lie further apart in space. The same technique is used for scale and rotation keys. To smooth the animation, interpolation between the key frames is necessary. A linear interpolation between the two keys in question is a simple solution that guarantees constant movement between them.
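A minimal sketch of such a linear interpolation for a position key is given below. The types Vec3 and PositionKey and the function InterpolateLinear are hypothetical and do not appear in the SDK sample; rotation keys stored as quaternions would usually be interpolated spherically instead, which is not shown here.

```cpp
#include <cassert>

struct Vec3 { float x, y, z; };

// Hypothetical position key: a value and the time at which it applies.
struct PositionKey { float time; Vec3 value; };

// Linearly interpolates between two keys that bracket the time t.
// Times outside [k0.time, k1.time] are clamped to the nearest key.
Vec3 InterpolateLinear(const PositionKey& k0, const PositionKey& k1, float t)
{
    assert(k1.time > k0.time);

    // Normalised position of t between the two keys, in [0, 1].
    float s = (t - k0.time) / (k1.time - k0.time);
    if (s < 0.0f) s = 0.0f;
    if (s > 1.0f) s = 1.0f;

    Vec3 r;
    r.x = k0.value.x + s * (k1.value.x - k0.value.x);
    r.y = k0.value.y + s * (k1.value.y - k0.value.y);
    r.z = k0.value.z + s * (k1.value.z - k0.value.z);
    return r;
}
```

Compared with picking the nearest key, the interpolated value changes by the same amount per unit of time between the two keys, which removes the visible jumps.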

To enhance the basic animation functions and make them usable for a real-time gaming application, it is not enough to play custom animations forward and backward; other functions are needed as well. One example of enhanced offline animation is blending the end of one animation into the beginning of the next. Additionally, the interpolation can be performed either cubically or linearly.

While an animation is playing, timing can be critical, so no additional blending frames should be inserted. In this example, 4 original frames are "lost" to the blend instead, which preserves the flow of motion.

The interpolated key frames are generated "on the fly" and are not stored in memory.
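One possible way to compute such a blend on the fly is sketched below. It is an assumption-laden illustration, not the implementation described here: the names BlendMode, BlendWeight and BlendValue are hypothetical, and the cubic variant uses a simple cubic ease-in/ease-out curve as a stand-in for whatever cubic scheme is actually used.

```cpp
#include <cassert>

enum BlendMode { BLEND_LINEAR, BLEND_CUBIC };

// Weight of the incoming animation at blend frame i (0-based) out of
// frameCount blend frames. The weight rises from just above 0 to just
// below 1, so the frames before and after the blend stay unchanged.
float BlendWeight(int i, int frameCount, BlendMode mode)
{
    assert(frameCount > 0 && i >= 0 && i < frameCount);
    float s = float(i + 1) / float(frameCount + 1);
    if (mode == BLEND_CUBIC)
        s = s * s * (3.0f - 2.0f * s); // cubic ease-in/ease-out
    return s;
}

// Blends one scalar channel (e.g. one translation component) of the
// outgoing and the incoming animation. Nothing is stored; the value is
// recomputed for every displayed frame.
float BlendValue(float outgoing, float incoming, float weight)
{
    return outgoing + weight * (incoming - outgoing);
}
```

With frameCount set to 4, the four overwritten frames receive linear weights of 0.2, 0.4, 0.6 and 0.8, so the total length of the sequence does not change.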

3.2 Physics-based Implementation

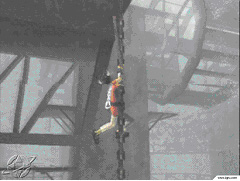

The current use of physics-based animation in real-time applications is limited to special problems such as animating chains (Fig. 7) or vegetation. But there are many more opportunities to breathe life into objects [Walter1997].

Fig. 7: ICO's real-time IK chain

Due to the recent availability of hardware transform & lighting, we are now able to devote processor time to tasks other than rendering environments. There are no more excuses for bad character animation [Lander2000]. To achieve more life-like characters, typical uses for procedural animation are look-at constraints, secondary motion, inverse kinematics, muscle bulges, chest heaves, ponytails or cloth animation.

3.2.1 Implementing physics-based animation using bone animation

By modifying single bones in a skeleton system, there is no longer any need to recalculate every single vertex for physics-based animation effects.

Let's imagine a snowboarder character mesh whose arms should shake when riding over rough snow. We therefore need an algorithm that traverses a hierarchical bone structure and modifies the relevant bone positions. Since we want the "arm shaking" to be calculated on the fly, two kinds of parameters are needed.

3.2.1.1 Initial parameters:

// PARAMETERS FOR COS-BASED factor ( factor = cosf(time*speed)*amplitude )
m_fAnimProceduralSpeed = 40.0f;
m_fAnimProceduralAmplitude = 0.3f;

// PARAMETERS for relations between hierarchies
m_fAnimProceduralAttenuation = 0.3f;

// PARAMETERS FOR TRANSLATION ( e.g. transx*factor )
m_fAnimProceduralTransX = 0.0f;
m_fAnimProceduralTransY = 1.0f;
m_fAnimProceduralTransZ = 0.0f;

// PARAMETERS FOR ROTATION ( e.g. rotx*factor )
m_fAnimProceduralRotX = 0.0f;
m_fAnimProceduralRotY = 0.1f;
m_fAnimProceduralRotZ = 0.0f;

3.2.1.2 Runtime parameters:

// to fade out/in animation

m_fAnimProceduralFADE;

// type of animation (preset)

m_gAnimProceduralTYPE;

3.3 Approach:

First we calculate a value depending on playback speed and the maximum

allowed amplitude.

// calc speed & amplitude

m_fFactor=cosf(m_fTime*m_fAnimProceduralSpeed)*m_fAnimProceduralAmplitude;

Then the hierarchical level of the modified bone is brought into the calculation.

// increase attenuation for every hierarchical level
if (m_fBoneModifier > 0.1f) m_fBoneModifier += m_fAnimProceduralAttenuation;
if (m_fBoneModifier < 0.0f) m_fBoneModifier = 0.0f;

// calc final modifier including hierarchical level
m_fFactor = m_fFactor * m_fBoneModifier * (1 - m_fAnimProceduralFADE);

Finally, we set up a translation and a rotation matrix containing the initial parameters and the appropriate runtime factors.

These matrices are then multiplied with the original bone matrices, resulting in a blend of offline-animated and physics-based bone positions.
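The final step might look roughly like the following sketch. It is self-contained and therefore uses a small hypothetical Mat4 type instead of the D3DX matrix functions. The row-vector convention (translation in the last row), the restriction to a rotation about the Y axis, the multiplication order and the names ProceduralParams, ProceduralFactor and ApplyProcedural are all assumptions made for illustration.

```cpp
#include <cassert>
#include <cmath>

struct Mat4 { float m[4][4]; };

Mat4 Identity()
{
    Mat4 r;
    for (int i = 0; i < 4; ++i)
        for (int j = 0; j < 4; ++j)
            r.m[i][j] = (i == j) ? 1.0f : 0.0f;
    return r;
}

Mat4 Multiply(const Mat4& a, const Mat4& b)
{
    Mat4 r;
    for (int i = 0; i < 4; ++i)
        for (int j = 0; j < 4; ++j)
        {
            r.m[i][j] = 0.0f;
            for (int k = 0; k < 4; ++k)
                r.m[i][j] += a.m[i][k] * b.m[k][j];
        }
    return r;
}

// Row-vector convention: the translation sits in the last row.
Mat4 Translation(float x, float y, float z)
{
    Mat4 r = Identity();
    r.m[3][0] = x; r.m[3][1] = y; r.m[3][2] = z;
    return r;
}

// Rotation about the Y axis by the given angle in radians.
Mat4 RotationY(float angle)
{
    Mat4 r = Identity();
    float c = std::cos(angle), s = std::sin(angle);
    r.m[0][0] = c;  r.m[0][2] = -s;
    r.m[2][0] = s;  r.m[2][2] = c;
    return r;
}

// Hypothetical subset of the initial parameters listed above.
struct ProceduralParams { float transX, transY, transZ, rotY; };

// factor = cos(time*speed) * amplitude * boneModifier * (1 - fade)
float ProceduralFactor(float time, float speed, float amplitude,
                       float boneModifier, float fade)
{
    assert(fade >= 0.0f && fade <= 1.0f);
    return std::cos(time * speed) * amplitude * boneModifier * (1.0f - fade);
}

// Assumed order: the procedural offset is applied in the bone's local
// space, i.e. before the original (offline-animated) bone matrix.
Mat4 ApplyProcedural(const Mat4& bone, const ProceduralParams& p, float factor)
{
    Mat4 t = Translation(p.transX * factor, p.transY * factor, p.transZ * factor);
    Mat4 r = RotationY(p.rotY * factor);
    return Multiply(Multiply(r, t), bone);
}
```

With a factor of 0, for example when the effect is fully faded out, both procedural matrices reduce to the identity and the offline-animated bone matrix is returned unchanged.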